GKE features

Volumes again

We are now at a crossroads. We can either start using a Database as a Service (DBaaS), such as Google Cloud SQL in our case, or keep using PersistentVolumeClaims with our own Postgres images and let Google Kubernetes Engine take care of storage via PersistentVolumes for us.

Both solutions are widely used.

Scaling

Scaling can be either horizontal scaling or vertical scaling. Vertical scaling is the act of increasing resources available to a pod or a node. Horizontal scaling is what we most often mean when talking about scaling: increasing the number of pods or nodes. We'll now focus on horizontal scaling.

Scaling pods

There are multiple reasons for scaling an application. The most common reason is that the number of requests an application receives exceeds the number of requests that can be processed. The limiting factor is often either the number of requests that a framework is intended to handle or the actual CPU or RAM.

I've prepared an application that is rather CPU-intensive. There is a prebuilt Docker image jakousa/dwk-app7:e11a700350aede132b62d3b5fd63c05d6b976394. The application accepts a query parameter ?fibos=25 that controls how long the computation takes. You should use values between 15 and 30.
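To get a feel for why the fibos value has such a strong effect on CPU usage, here is a minimal Python sketch. It assumes the application computes Fibonacci numbers with naive recursion and the convention fib(0) = fib(1) = 1. The source does not show the implementation, but this convention matches the logged result "Fibonacci 20: 10946" later in this section. The function name `fibo` is hypothetical.

```python
def fibo(n):
    """Return (value, number_of_calls) for a naive recursive Fibonacci.

    Assumption: fib(0) = fib(1) = 1, which matches the logged value for 20.
    """
    if n < 2:
        return 1, 1
    a, calls_a = fibo(n - 1)
    b, calls_b = fibo(n - 2)
    # One call for this invocation plus all calls made below it.
    return a + b, calls_a + calls_b + 1


value, calls = fibo(20)
print(value, calls)  # 10946 21891
```

Under this assumption the number of calls grows roughly by a factor of 1.6 for each increment of the parameter, which is why the step from 15 to 30 changes the load so much.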

Here is the configuration to get the app up and running:

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: cpushredder-dep
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cpushredder
  template:
    metadata:
      labels:
        app: cpushredder
    spec:
      containers:
        - name: cpushredder
          image: jakousa/dwk-app7:e11a700350aede132b62d3b5fd63c05d6b976394
          resources:
            limits:
              cpu: "150m"
              memory: "100Mi"

Note that we have finally set resource limits for a Deployment as well. The "m" suffix of the CPU limit stands for "thousandth of a core". Thus 150m equals 15% of a single CPU core (150/1000 = 0.15).
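As a quick check of the arithmetic, the conversion can be sketched in Python. The helper name `cpu_to_cores` is hypothetical, and it only handles plain numbers and the "m" suffix, not every quantity format Kubernetes accepts.

```python
def cpu_to_cores(quantity):
    """Convert a CPU quantity such as "150m" or "2" to a number of cores."""
    if quantity.endswith("m"):
        # "m" means millicores: thousandths of a core.
        return int(quantity[:-1]) / 1000
    return float(quantity)


print(cpu_to_cores("150m"))  # 0.15
```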

The service looks completely familiar by now.

service.yaml

apiVersion: v1
kind: Service
metadata:
  name: cpushredder-svc
spec:
  type: LoadBalancer
  selector:
    app: cpushredder
  ports:
    - port: 80
      protocol: TCP
      targetPort: 3001

Next we have HorizontalPodAutoscaler. This is an exciting new Resource for us to work with.

horizontalpodautoscaler.yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: cpushredder-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: cpushredder-dep
  minReplicas: 1
  maxReplicas: 6
  targetCPUUtilizationPercentage: 50

HorizontalPodAutoscaler automatically scales pods horizontally. The YAML here defines the target Deployment, the minimum and maximum number of replicas, and the target CPU utilization. Utilization is measured relative to the CPU request of the pods, which defaults to the limit when only a limit is set, as in our Deployment. If the CPU utilization exceeds the target, additional replicas are created until the maximum number of replicas is reached.
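The Kubernetes documentation describes the core of the calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default) of 1.0. The following Python sketch illustrates that formula with the limits from our manifest. It is a simplification: the real controller also accounts for pod readiness and missing metrics, and the function name `desired_replicas` is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=6, tolerance=0.1):
    """Simplified HPA replica calculation for a utilization target in percent."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas bounds of the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(1, 100, 50))  # 2
print(desired_replicas(2, 90, 50))   # 4
print(desired_replicas(4, 0, 50))    # 1
```

With one replica at 100% utilization and a 50% target, the sketch suggests two replicas; once the load disappears the suggestion falls back to the minimum.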

Let us now see what happens:

$ kubectl top pod -l app=cpushredder
NAME                               CPU(cores)   MEMORY(bytes)
cpushredder-dep-85f5b578d7-nb5rs   1m           20Mi

$ kubectl get hpa
NAME              REFERENCE                    TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
cpushredder-hpa   Deployment/cpushredder-dep   0%/50%    1         6         1          62s

$ kubectl get svc
NAME              TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
cpushredder-svc   LoadBalancer   10.31.254.209   35.228.149.206   80:32577/TCP   94s

Opening the external IP (http://35.228.149.206 above) in your browser starts one computation that consumes some CPU. Refresh the page a few times and you should see that if your requests push the pod above its limit, the pod is taken down.

$ kubectl logs -f cpushredder-dep-85f5b578d7-nb5rs
Started in port 3001
Received a request
started fibo with 20
Received a request
started fibo with 20
Fibonacci 20: 10946
Closed
Fibonacci 20: 10946
Closed
Received a request
started fibo with 20

After a few requests we will see the HorizontalPodAutoscaler create a new replica as the CPU utilization rises. Because resource usage fluctuates, sometimes greatly due to spikes on start or exit, the HPA by default waits 5 minutes before scaling down. If your application still has multiple replicas at 0%/50%, just wait. If the wait time is set too short for the resource usage statistics to stabilize, the replica count may start "thrashing".
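To illustrate why a short wait time can cause thrashing, here is a small Python sketch of the idea behind scale-down stabilization: the autoscaler only scales down to the highest recommendation seen during the window. This is a simplified model, assuming the window is counted in evaluation steps rather than seconds; the function name `stabilized` and the sample recommendations are hypothetical.

```python
from collections import deque


def stabilized(recommendations, window):
    """For each step, return the highest of the last `window` recommendations."""
    recent = deque(maxlen=window)
    result = []
    for recommendation in recommendations:
        recent.append(recommendation)
        # Scale-ups pass through immediately, scale-downs wait for the window.
        result.append(max(recent))
    return result


raw = [4, 1, 3, 1, 1, 1]
print(stabilized(raw, window=1))  # [4, 1, 3, 1, 1, 1]
print(stabilized(raw, window=3))  # [4, 4, 4, 3, 3, 1]
```

With a window of one step the replica count follows every fluctuation, while the longer window smooths the dips and only scales down once the load has stayed low.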

I recommend opening the cluster in Lens and just refreshing the page and looking at what happens in the cluster. If you do not have Lens installed, kubectl get deployments --watch and kubectl get pods --watch show the behavior in real time as well.

By default it will take 300 seconds to scale down. You can change the stabilization window by adding the following to the spec of the HorizontalPodAutoscaler. Note that the behavior field is part of the autoscaling/v2 API, so with the autoscaling/v1 manifest above you would first need to switch to autoscaling/v2, where the CPU target is defined under metrics instead of targetCPUUtilizationPercentage:

behavior:
  scaleDown:
    stabilizationWindowSeconds: 30

Figuring out autoscaling with HorizontalPodAutoscalers can be one of the more challenging tasks. Choosing which resources to look at and when to scale is not easy. In our case, we only stress the CPU. But your applications may need to scale based on, and take into consideration, a number of resources, e.g. network, disk or memory.

Scaling nodes

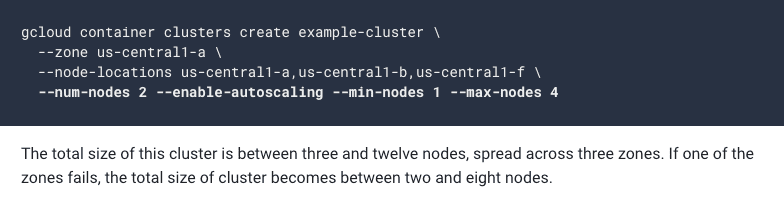

Scaling nodes is a supported feature in GKE. With the cluster autoscaling feature we can run just the number of nodes we need.

$ gcloud container clusters update dwk-cluster --zone=europe-north1-b --enable-autoscaling --min-nodes=1 --max-nodes=5
Updated [https://container.googleapis.com/v1/projects/dwk-gke/zones/europe-north1-b/clusters/dwk-cluster].
To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/europe-north1-b/dwk-cluster?project=dwk-gke

For a more robust cluster see examples on creation here: https://cloud.google.com/kubernetes-engine/docs/concepts/cluster-autoscaler

Cluster autoscaling may disrupt pods by moving them around as the number of nodes increases or decreases. To solve possible issues with this, the resource PodDisruptionBudget can be used. With the resource, the availability requirements for a set of pods can be defined via one of two fields: minAvailable or maxUnavailable.

poddisruptionbudget.yaml

apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: example-app-pdb
spec:
  maxUnavailable: 50%
  selector:
    matchLabels:
      app: example-app

This configuration would ensure that no more than half of the pods can be unavailable. The Kubernetes documentation states "The budget can only protect against voluntary evictions, not all causes of unavailability."
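As a worked example of what a percentage budget means in practice, the following Python sketch computes how many pods may be disrupted for a given replica count. According to the Kubernetes documentation, a percentage value for maxUnavailable is rounded up to the nearest integer. The function name `max_disruptions` is hypothetical, and the sketch assumes all pods are currently healthy.

```python
import math


def max_disruptions(replicas, max_unavailable_percent):
    """Number of pods that may be unavailable under a percentage budget."""
    # Percentages are rounded up to the nearest whole pod.
    return math.ceil(replicas * max_unavailable_percent / 100)


print(max_disruptions(6, 50))  # 3
print(max_disruptions(7, 50))  # 4
```

Because of the rounding, with an odd number of pods slightly more than half of them may be disrupted at the same time.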

In addition to horizontal scaling, you can also scale vertically with VerticalPodAutoscaler, which adjusts the CPU and memory requests of individual pods. Together these help ensure you make good use of the resources you pay for.

Side note: Kubernetes also offers the possibility to limit resources per namespace. This can prevent apps in the development namespace from consuming too many resources. Google has created a great video that explains the possibilities of the ResourceQuota object.